Quantifying the Quality of Generated Music

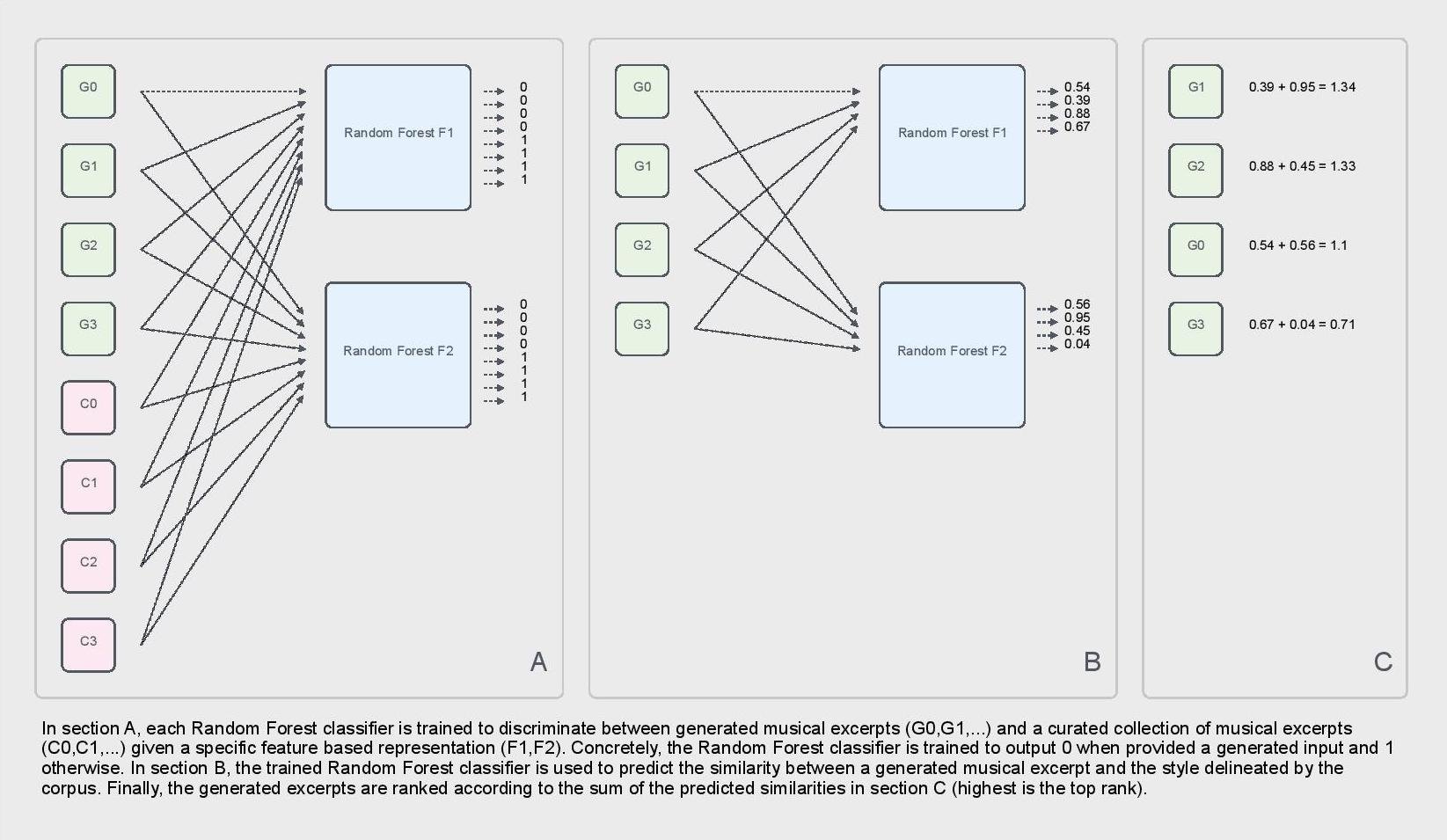

Generative models are often trained on a curated collection of musical works, with the aim of teaching the model to compose novel music that exhibits the stylistic characteristics of those works. Whereas human composers work at a relatively fixed rate, generative models can produce music in very large quantities: computers do not need to take breaks, and generation can be parallelized across several machines with relative ease. This makes evaluation difficult, because humans cannot listen critically to music for extended periods of time. To address this issue, we developed quantitative methods for evaluating the quality of generated music. StyleRank ranks a collection of generated pieces by their stylistic similarity to a curated corpus. Experimental evidence demonstrates that StyleRank's rankings are highly correlated with human perception of stylistic similarity. StyleRank can be used to compare different generative models, or to filter the output of a single model by automatically discarding low-quality pieces.
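To make the rank-then-filter idea concrete, here is a minimal sketch in Python. It is not the StyleRank algorithm itself: it assumes each piece has already been reduced to a feature vector (toy 12-bin pitch-class histograms here, purely hypothetical data) and uses mean cosine similarity to the corpus as a stand-in similarity measure. The function names `rank_by_similarity` and `keep_top` are illustrative, not part of any published API.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_by_similarity(generated, corpus):
    """Rank generated pieces by mean similarity to the corpus.

    generated: dict mapping piece name -> feature vector
    corpus:    list of feature vectors for the curated works
    Returns a list of (name, score) pairs, most similar first.
    """
    scores = {
        name: sum(cosine_similarity(vec, ref) for ref in corpus) / len(corpus)
        for name, vec in generated.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


def keep_top(ranked, k):
    """Filter step: keep the names of the k highest-ranked pieces."""
    return [name for name, _ in ranked[:k]]


# Hypothetical toy data: pitch-class histograms (C, C#, D, ..., B).
corpus = [
    [4, 0, 2, 0, 3, 1, 0, 4, 0, 2, 0, 1],
    [5, 0, 1, 0, 3, 2, 0, 3, 0, 2, 0, 1],
]
generated = {
    "piece_a": [4, 0, 2, 0, 3, 1, 0, 3, 0, 2, 0, 1],
    "piece_b": [1, 3, 0, 3, 0, 1, 3, 0, 3, 0, 3, 0],
    "piece_c": [3, 1, 2, 0, 2, 1, 1, 3, 0, 2, 0, 1],
}

ranked = rank_by_similarity(generated, corpus)
for name, score in ranked:
    print(f"{name}: {score:.3f}")

print("kept:", keep_top(ranked, 2))
```

The same ranking can serve both uses mentioned above: comparing the score distributions of two models' outputs, or discarding the lowest-ranked pieces from a single model.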

Jeff Ens holds a BFA in music composition from Simon Fraser University and is currently completing a Ph.D. at the School of Interactive Arts and Technology as a member of the Metacreation Lab. His research focuses on the development and evaluation of generative music algorithms.